As artificial intelligence (AI) becomes embedded in nearly every stage of the talent lifecycle – from recruitment and performance reviews to leadership development and succession planning – organizations are gaining new capabilities at scale.

But with the benefits of time and cost savings come real risks, especially when it comes to fairness and equity.

Artificial intelligence bias – the systematic and unfair distortion of data or outcomes by AI systems – can quietly undermine talent outcomes and trust in leadership.

AI systems are only as objective as the data and assumptions behind them. If left unchecked, they can amplify historical biases, reinforce stereotypes, and obscure the very qualities organizations say they value in leaders. In this article, we’ll explore where these risks arise, how to evaluate your systems, and what steps talent leaders can take to ensure their processes remain ethical, inclusive, and effective.

Advising leaders will always be a fundamentally human-centric part of our business; at its core, it will remain so. That said, we use AI where possible to automate back-office processes that don’t directly involve human-to-human interaction.

Christoph Wollersheim, Co-Leader of Egon Zehnder’s Artificial Intelligence Practice

What Is Artificial Intelligence (AI) Bias?

AI bias occurs when an algorithm produces systematically unfair outcomes for certain groups of people. This can result from biased training data, flawed model assumptions, or skewed decision criteria. Even well-intentioned tools can deliver inequitable results if not carefully designed and monitored.

Bias isn’t always obvious – it’s often embedded deep in the underlying assumptions of an AI model.

Christoph Wollersheim, Co-Leader of Egon Zehnder’s Artificial Intelligence Practice

Why AI Bias Matters

AI bias can lead to discriminatory outcomes, expose companies to legal risk, and erode trust among employees. It can also sabotage inclusive initiatives by embedding exclusion into the very systems meant to optimize talent.

Although humans can also be biased when evaluating and developing talent (and most likely are), bias in autonomous models carries a different type of risk. Outcomes can be significantly skewed in one direction, and without human supervision you may not understand the cause. Human supervision helps work toward AI outcomes that are not just unbiased but also explainable.

Common Types of AI Bias in Talent Development

AI-driven talent tools have been shown to replicate and even magnify biases based on gender, race, age, and educational background.

For example:

- A resume screening tool may downgrade candidates who didn’t attend “top-tier” universities, disproportionately affecting groups that have historically been underrepresented at those institutions.

- A leadership potential model trained on past promotions may reward extroversion or confidence over actual performance.

- Learning algorithms may recommend less strategic training content to women or minorities due to biased historical data.

These biases are not just technical glitches – they can result in real harm to careers, culture, and organizational reputation.

Where AI Bias Emerges in the Talent Development Lifecycle

Artificial intelligence is transforming nearly every phase of talent development, from hiring and onboarding to leadership planning. But with that transformation comes a critical challenge: ensuring these technologies don’t reinforce the very inequities organizations are trying to dismantle. AI systems are only as objective as the data and assumptions behind them – and when those reflect historical or systemic biases, the outcomes can be both subtly exclusionary and broadly damaging.

To truly harness the promise of AI in HR, talent leaders must understand where and how bias shows up across the employee journey. It’s not just about what tools are used, but about how they’re trained, implemented, and interpreted. From resume screening to succession planning, even small algorithmic decisions can create ripple effects on who gets seen, supported, and selected.

The following breakdown highlights four critical inflection points in the talent lifecycle where AI-driven bias is most likely to emerge – and where intentional oversight is most urgently needed.

Recruitment and Candidate Screening

Automated tools used to screen resumes, score candidates, and predict job fit often rely on historical hiring data. If those datasets reflect past preferences for certain schools, job titles, or demographics, the AI may unintentionally filter out qualified but non-traditional candidates.

For example:

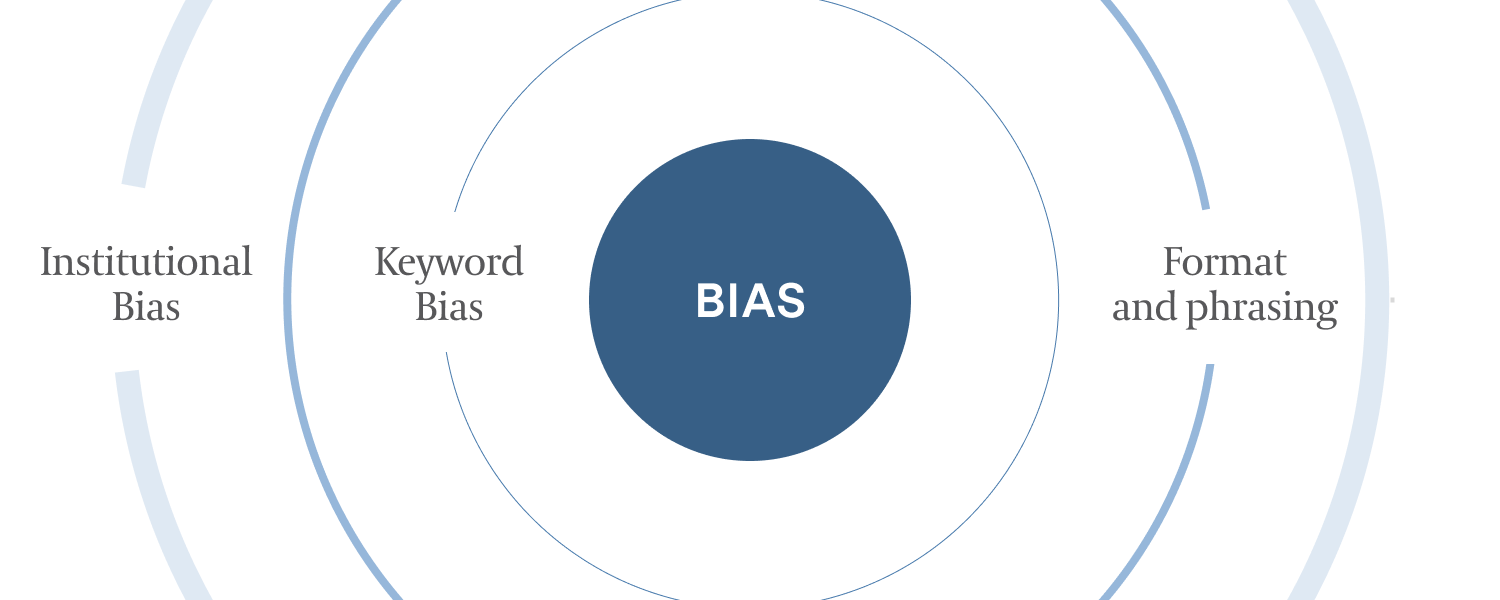

- Keyword bias: Candidates from different industries or underrepresented groups may describe similar experience in different language and be penalized for not matching the expected keywords.

- Institutional bias: Overemphasis on certain schools or companies may disadvantage applicants with equivalent potential from less recognized institutions.

- Format and phrasing: AI systems may favor resumes formatted a certain way or using specific “power” words, which can correlate with gendered communication styles.
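To make the keyword and phrasing risks concrete, here is a deliberately simplified screener that scores resumes by exact keyword overlap. The keyword list, the two resume snippets, and the optional synonym table are hypothetical, invented for illustration; real screening products are far more complex, but the same failure mode can appear whenever matching rewards specific wording rather than underlying experience.

```python
# Toy exact-match resume screener. The keywords, resumes, and synonym
# table are hypothetical examples, not taken from any real product.
REQUIRED_KEYWORDS = {"stakeholder", "management", "revenue", "growth"}


def keyword_score(resume_text, keywords, synonyms=None):
    """Return the fraction of required keywords found in the resume.

    If a synonym table is given, alternative words are mapped onto
    canonical keywords before matching.
    """
    synonyms = synonyms or {}
    # Lowercase each word and strip surrounding punctuation
    tokens = {word.strip(".,;:").lower() for word in resume_text.split()}
    canonical = {synonyms.get(token, token) for token in tokens}
    return len(keywords & canonical) / len(keywords)


resume_a = "Led stakeholder management to drive revenue growth."
resume_b = "Coordinated partners and community groups to expand funding."

print(keyword_score(resume_a, REQUIRED_KEYWORDS))  # → 1.0
print(keyword_score(resume_b, REQUIRED_KEYWORDS))  # → 0.0

# Hypothetical, human-reviewed synonym table for alternative phrasing
SYNONYMS = {"partners": "stakeholder", "coordinated": "management",
            "funding": "revenue", "expand": "growth"}
print(keyword_score(resume_b, REQUIRED_KEYWORDS, SYNONYMS))  # → 1.0
```

Both snippets describe comparable work, yet exact matching separates them completely. A curated synonym table closes the gap in this toy case, but in practice it only helps if it is itself reviewed for the same blind spots.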

Performance Evaluation and Promotions

AI systems used to assess employee performance, identify promotion readiness, or flag high-potential talent often pull from biased or inconsistent data, such as subjective performance reviews or sales figures that don’t account for territory differences or support resources. As a result:

- Reinforced managerial bias: If a manager has historically undervalued certain team members, those patterns can be perpetuated algorithmically.

- One-dimensional KPIs: Metrics like revenue generated or hours logged may not reflect the full scope of leadership capabilities or teamwork contributions.

- Penalizing career interruptions: Time off for caregiving, health, or part-time roles may unfairly impact performance scores without context-aware analysis.

These systems can unintentionally reinforce exclusionary patterns, especially for women, caregivers, or employees from non-dominant cultural backgrounds.
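One simple form of context-aware analysis is to normalize output by the time an employee was actually active rather than by the full calendar review period. This minimal sketch assumes a hypothetical output metric (deals closed) and two invented employees, one of whom took four months of caregiving leave; it illustrates the idea and is not a recommended scoring method.

```python
from dataclasses import dataclass


@dataclass
class ReviewPeriod:
    """One employee's record for a 12-month review period (hypothetical fields)."""
    name: str
    deals_closed: int   # hypothetical output metric
    months_active: int  # months actually worked, excluding approved leave


def raw_score(record: ReviewPeriod) -> float:
    # Calendar-based total: ignores any time away
    return float(record.deals_closed)


def per_active_month(record: ReviewPeriod) -> float:
    # Context-aware rate: output divided by months actually worked
    if record.months_active <= 0:
        raise ValueError(f"{record.name} has no active months to evaluate")
    return record.deals_closed / record.months_active


alex = ReviewPeriod("Alex", deals_closed=24, months_active=12)
sam = ReviewPeriod("Sam", deals_closed=18, months_active=8)  # 4 months caregiving leave

print(raw_score(alex), raw_score(sam))                # → 24.0 18.0
print(per_active_month(alex), per_active_month(sam))  # → 2.0 2.25
```

Ranked on raw annual totals, the employee who took leave looks weaker; ranked on output per active month, the order reverses. Which normalization fits a given role is a judgment call that people, not the tool's defaults, should make.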

Learning and Development Recommendations

Many organizations use AI-powered platforms to recommend training modules, suggest mentors, or create personalized growth pathways. But if the training data or algorithms reflect a narrow view of success, the recommendations can become self-reinforcing:

- Algorithmic homogeneity: Employees may be steered toward learning paths that mirror those of their demographic peers, rather than those that reflect their individual potential or ambitions.

- Unequal stretch opportunities: AI may under-recommend high-impact projects or leadership prep to employees in historically excluded groups, especially if they haven’t followed traditional advancement trajectories.

- Limited behavioral nuance: If AI relies too heavily on role modeling (i.e., mimicking the development paths of prior leaders), it may fail to capture emerging or unconventional leadership archetypes.

This creates a cycle where access to transformative development is subtly limited by outdated assumptions about who will succeed.

Succession Planning and Leadership Readiness

Bias also emerges in models used to assess readiness for succession or advancement into senior roles. Leadership assessments powered by AI may prioritize traits historically associated with dominant leadership styles – such as assertiveness or competitiveness – while undervaluing qualities like collaboration, empathy, or resilience.

These models may:

- Favor past archetypes over future needs, particularly when companies are aiming to evolve their leadership profile.

- Underestimate diverse talent who haven’t had the same visibility, feedback, or advocacy as others on the succession slate.

- Overlook systemic barriers like limited access to sponsor relationships or informal leadership development.

3 Ways to Audit AI Tools for Bias

As organizations increasingly rely on artificial intelligence to support hiring, development, and succession decisions, it's critical to ensure these tools are working for inclusion – not against it. Even well-intentioned AI systems can produce biased outcomes if they’re trained on narrow datasets, optimized for the wrong indicators, or deployed without oversight.

Auditing your AI tools isn’t a one-time task – it’s an ongoing responsibility that requires vigilance, transparency, and collaboration across HR, legal, data, and leadership teams. Below are three key areas to focus on when evaluating AI systems for fairness and inclusion.

1. Evaluate Data Inputs

Start by asking: Is the training data representative of your workforce? Does it include a range of demographics, career paths, and performance indicators? If not, the model may under-serve or mischaracterize some employees.
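A first-pass check is to compare the demographic mix of the training data with the population the model will be applied to. This sketch assumes you already have one group label per record (the labels A and B and all counts are hypothetical) and uses an arbitrary tolerance of 5 percentage points. If the comparison population itself reflects past exclusion, matching it is not on its own evidence of fairness.

```python
from collections import Counter


def group_shares(labels):
    """Share of records belonging to each group label."""
    if not labels:
        raise ValueError("Need at least one labeled record")
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def representation_gaps(training_labels, reference_labels, tolerance=0.05):
    """Groups whose share in the training data differs from the reference
    population by more than `tolerance` (positive = over-represented)."""
    train = group_shares(training_labels)
    reference = group_shares(reference_labels)
    gaps = {}
    for group in sorted(set(train) | set(reference)):
        diff = train.get(group, 0.0) - reference.get(group, 0.0)
        if abs(diff) > tolerance:
            gaps[group] = round(diff, 3)
    return gaps


# Hypothetical counts: historical promotion records vs. current workforce
training = ["A"] * 80 + ["B"] * 20
workforce = ["A"] * 55 + ["B"] * 45

print(representation_gaps(training, workforce))  # → {'A': 0.25, 'B': -0.25}
```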

Your AI is only as fair as the data you feed it. Audit your inputs before trusting the outputs.

Christoph Wollersheim, Co-Leader of Egon Zehnder’s Artificial Intelligence Practice

2. Review Model Outputs

Regularly test for disparate outcomes by race, gender, age, and other protected characteristics. Disparities in scoring, promotion likelihood, or development opportunities should prompt deeper investigation.
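One widely used screen for disparate outcomes is the “four-fifths rule” from the U.S. Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is less than 80% of the rate for the highest-selected group is generally regarded as showing evidence of adverse impact. This sketch computes those impact ratios. The group names and counts are hypothetical, and the rule is a rule of thumb rather than a legal determination; small samples in particular warrant proper statistical testing and legal review.

```python
def impact_ratios(outcomes):
    """Map each group to its selection rate divided by the highest group's rate.

    `outcomes` maps group -> (number selected, number considered).
    """
    rates = {group: selected / considered
             for group, (selected, considered) in outcomes.items()}
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("No group has any selections; ratios are undefined")
    return {group: rate / highest for group, rate in rates.items()}


def four_fifths_flags(outcomes, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(group for group, ratio in impact_ratios(outcomes).items()
                  if ratio < threshold)


# Hypothetical promotion outcomes from one review cycle
outcomes = {"group_x": (48, 80), "group_y": (12, 30)}

print(four_fifths_flags(outcomes))  # → ['group_y']
```

Here group_x is selected at 60% and group_y at 40%, an impact ratio of about 0.67, so group_y is flagged for deeper investigation.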

3. Collaborate with Data Scientists and Legal Teams

Bias mitigation isn’t just HR’s job. Cross-functional collaboration helps ensure that AI tools comply with privacy standards and with evolving regulations and guidance such as the EU AI Act or U.S. EEOC guidance.

Mitigating AI Risk: Best Practices for Talent Leaders

Even the most sophisticated AI tools can introduce unintended bias if left unchecked. That’s why successful implementation isn’t just about the technology – it’s about the human systems wrapped around it.

The practices below outline how to bring rigor, accountability, and fairness to AI-enabled talent decisions:

- Human oversight and governance: AI should inform – not replace – human decision-making. Establish clear review checkpoints where people can validate or challenge algorithmic recommendations. Consider establishing an AI review board, made up of technical and non-technical members from the business, HR, and legal, to evaluate and monitor AI use cases.

- Regular bias testing and model retraining: Set a cadence for bias audits and model retraining. As your workforce evolves, your AI systems must adapt. Regular reviews prevent drift and unintended consequences.

- Vendor accountability: When sourcing third-party platforms, ask:

  - What bias mitigation practices are in place?

  - Can we review the model’s decision logic?

  - Are there clear audit trails?
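For the regular bias testing and model retraining practice, one common way to detect drift is the population stability index (PSI), which compares how model scores are distributed across bins in a baseline period versus a recent period: PSI = Σ (actualᵢ − expectedᵢ) × ln(actualᵢ / expectedᵢ), where each term uses the share of scores in bin i. This sketch assumes scores have already been bucketed into the same bins for both periods; the counts are hypothetical, and the commonly cited thresholds (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) are industry conventions rather than a standard. PSI shows that the score distribution has moved, not whether the shift is unfair, so it works best alongside per-group outcome checks.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between a baseline and a recent score distribution.

    Both arguments are per-bin counts over the same score bins. `eps`
    keeps empty bins from producing log(0) or division by zero.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("Both distributions must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total == 0 or actual_total == 0:
        raise ValueError("Each distribution needs at least one observation")

    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        e_share = max(expected / expected_total, eps)
        a_share = max(actual / actual_total, eps)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi


# Hypothetical counts of "readiness" scores in five bins, low to high
baseline = [100, 200, 400, 200, 100]
this_quarter = [50, 150, 350, 300, 150]

print(round(population_stability_index(baseline, baseline), 3))      # → 0.0
print(round(population_stability_index(baseline, this_quarter), 3))  # → 0.117
```

In this example the scores have shifted upward enough to land in the "moderate shift" band, which would be a prompt to investigate and, if needed, retrain.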

The Role of Transparent Communication and Ethical Leadership in Addressing AI Bias

To effectively address AI bias in talent development, transparent communication and ethical leadership must be foundational. Organizations should invest in building AI literacy across HR and talent teams so they can engage with these tools knowledgeably and responsibly. This means helping leaders understand how AI systems are trained, where bias can emerge, how to interpret outputs, and what red flags to watch for. Equally important is being open with employees about when and how AI is being used – whether in promotion decisions, development recommendations, or performance assessments.

Transparency fosters trust.

Employees are more likely to embrace AI when they understand its purpose, have opportunities to give feedback, and know there are human checks in place to ensure fairness. By combining education with open dialogue, organizations can create a culture that values accountability and equity in every AI-supported decision.

People deserve to know when a machine is shaping their career path – and to understand how.

Christoph Wollersheim, Co-Leader of Egon Zehnder’s Artificial Intelligence Practice

Lead Talent Development Responsibly in the Age of AI

Artificial intelligence offers tremendous potential to accelerate leadership development and unlock untapped talent – but only when deployed with intentionality, transparency, and robust human oversight. Left unchecked, AI bias can quietly erode inclusivity and derail promising careers. Proactively identifying and mitigating these risks isn’t just a technical issue – it’s a leadership imperative.

Now is the time to ask critical questions of your systems, your vendors, and your internal processes. A more inclusive, future-ready talent strategy begins with understanding where AI supports progress – and where it might be silently holding you back.

The Strategic Role of the Chief AI Officer

As artificial intelligence reshapes industries, the Chief AI Officer (CAIO) has emerged as a critical C-suite role – one that combines technical fluency, ethical stewardship, and enterprise-wide vision. No longer relegated to R&D or isolated innovation hubs, today’s CAIO is responsible for embedding AI across the organization in ways that accelerate value creation while safeguarding trust, compliance, and responsible use.

The CAIO's role is not just about selecting the right algorithms. It’s about helping the enterprise navigate systemic transformation. This includes reimagining operating models, redesigning talent and data strategies, and influencing board-level discussions on AI risk, innovation, and governance. In many cases, the CAIO becomes a bridge between technology and business leadership – fluent in both data science and organizational dynamics.

Successful Chief AI Officers must also anticipate regulation, navigate evolving ethical frameworks, and ensure that AI is implemented in ways that align with company values. Their leadership sets the tone for how AI interacts with people – from customer experience to internal talent development.

At Egon Zehnder, we’ve seen firsthand how transformative the right CAIO can be. Through our global executive search and advisory capabilities, we help organizations identify AI leaders who combine technical depth with enterprise leadership, systems thinking, and a bold, responsible vision for the future.

As organizations shift from experimenting with AI to scaling it, the CAIO will be pivotal not only to digital acceleration but also to the company’s license to operate in a world increasingly shaped by algorithms.

Partner with Egon Zehnder

As a global leadership advisory firm, Egon Zehnder combines deep expertise in executive search, succession planning, and leadership development with a principled approach to technology adoption. Our consultants help organizations evaluate the integrity and fairness of AI-enabled tools, align them with enterprise DEI goals, and build resilient, human-centered talent ecosystems.

Whether you're building a new leadership pipeline, refining your assessment strategy, or ensuring AI supports – not replaces – judgment and inclusion, Egon Zehnder can help you lead with clarity and confidence in the AI era.